
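Moore’s Law, which grew out of a 1965 prediction by American engineer Gordon Moore, states that the number of transistors per silicon chip doubles approximately every two years (Moore originally projected a doubling every year and revised the pace to every two years in 1975), leading to increased computing power and often a reduction in cost.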
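To get a feel for how quickly that doubling compounds, here is a minimal sketch of the arithmetic. The function name and the starting count of 1,000 transistors are hypothetical values chosen for illustration, not real chip data, and the fixed two-year doubling period is an idealization.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward, assuming it doubles every
    `doubling_period_years` years (an idealized reading of Moore's Law)."""
    return initial_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: a chip with 1,000 transistors.
for years in (2, 10, 20):
    print(years, projected_transistors(1_000, years))
# 2 2000.0
# 10 32000.0
# 20 1024000.0
```

Under this assumption, ten doublings over twenty years multiply the count by roughly a thousand, which is why even a steady two-year pace feels explosive over time.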

The current AI revolution is driven by GPUs: larger GPU clusters enable faster training of complex models and the development of larger, more powerful AI.

Elon Musk, CEO of Tesla and SpaceX and a former investor in OpenAI, and Sam Altman, CEO of OpenAI, have been at odds ever since OpenAI decided to become a for-profit company.

So, what did Elon Musk, once an investor in OpenAI, do?

Musk, through his AI startup xAI, released its latest AI model, Grok 3, on February 17, 2025. I can’t tell you how impressive it is for the price.

Grok 3 is xAI’s answer to OpenAI, Google & DeepSeek

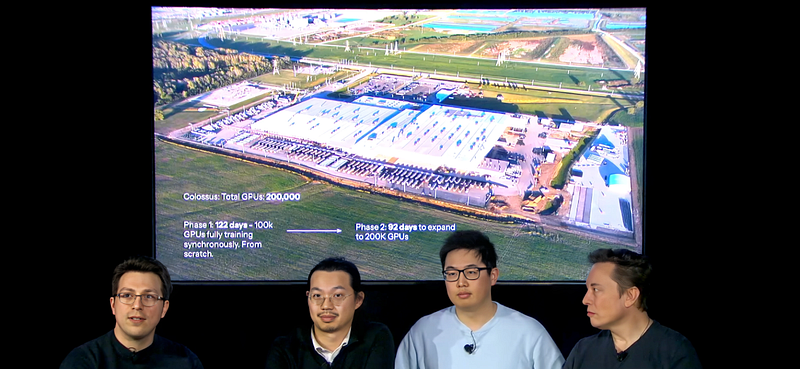

Grok 3 was built in just over eight months. Just eight months.

For those eight months, the startup trained the model on its proprietary Colossus supercomputer cluster, which houses over 100,000 Nvidia Hopper GPUs, using datasets from various sources.

The result?

A model that’s not just quick but deep, designed to handle everything from math puzzles to science questions to coding challenges, all while keeping things real and conversational.

The intensive training has refined the chatbot’s reasoning abilities through large-scale reinforcement learning, allowing it to think for anywhere from a few seconds to several minutes before responding, much like OpenAI’s o1 and DeepSeek-R1.

The model has shown significant improvements in reasoning, coding, mathematics, general knowledge, and tasks requiring it to follow instructions.

In fact, Elon Musk has claimed that Grok 3 was trained with 10x more compute than its predecessor.

Musk has also claimed that Grok 3 was trained on extensive datasets from various sources, including filings from court cases, which he says allow it to “render extremely compelling legal verdicts.”

Capabilities of Grok 3

Grok 3 reportedly has 2.7 trillion parameters, was trained on 12.8 trillion tokens, and offers a massive 1-million-token context window, making it stand out from the crowd.

The model also demonstrated improved factual accuracy and enhanced stylistic control. Under the codename “chocolate”, an early version of Grok 3 topped the LMArena Chatbot Arena leaderboard, outperforming all competitors in Elo scores across all categories.

Users can ask Grok 3 to “Think,” or, for more difficult queries, use “Big Brain” mode, which applies additional compute to its reasoning. The startup describes the reasoning models as best suited for mathematics, science, and programming questions.

Personally, I have used Grok 3 and found it excellent at reasoning through complex problems. The fact that it is free is what makes this model attractive to a wide range of users.
