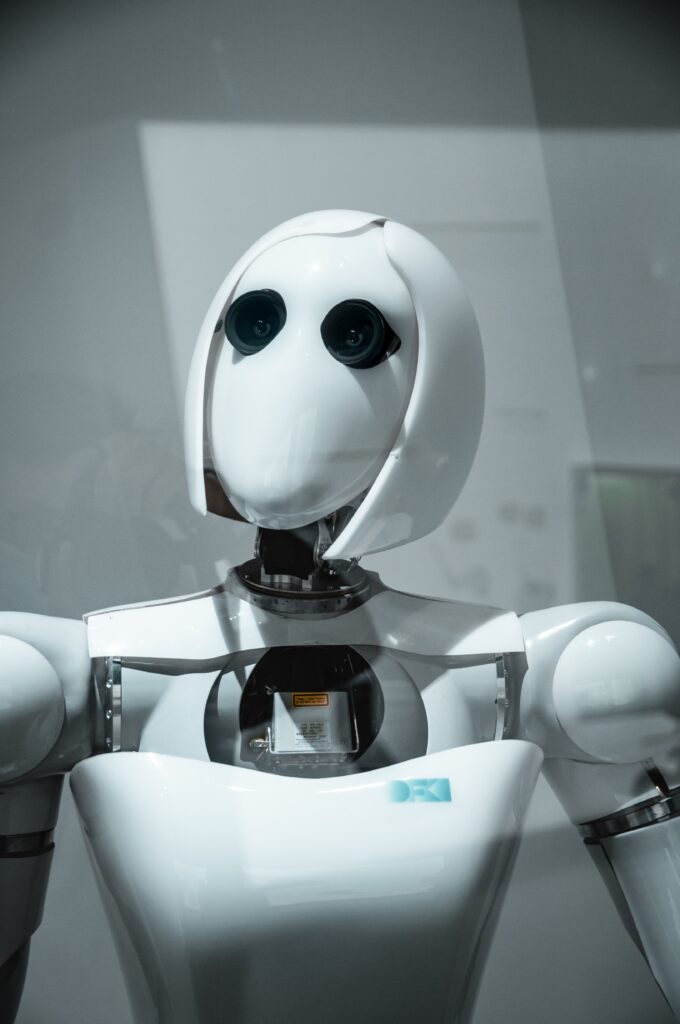

We all know the good and bad when it comes to basic morality. But what of science? What of the future? What of the yin and yang of science and technology? You see AI everywhere. What would be its yin and yang?

As per Ancient Chinese philosophy, “yin and yang are a dualistic concept that describes how seemingly opposing or conflicting forces in the natural world may be complementary, linked, and interdependent, and how they can give rise to each other as they interrelate.”

The TED-Ed YouTube channel has a video on the hidden meanings of yin and yang which I would strongly suggest checking out.

I am sure many of you have read Isaac Asimov’s ‘I, Robot.’ The book is a collection of short stories in which the author explores the benefits and drawbacks of a fascinating future in which human-like robots roam the streets. Does this sound familiar? There is also a Hollywood movie starring Will Smith, based on the book.

AI contains that duality, and it is called bias. Bias is defined as a negative characteristic that gives a disproportionate weight in favour of or against one item, person, or group as compared to another.

The simplified version is that we are so closed off to the idea of an all-inclusive culture that we unconsciously and unintentionally infuse our own biases into the AI that we create. A positive trait from our end might be seen as a negative trait by someone from another culture. This gives, in essence, a disproportionate weight in favour of or against one thing, person, or group compared to another. As many of you might know, this creates what is called a systematic error: a statistical bias that arises from an unrepresentative population sample.
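To make the idea of a systematic error a little more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the two groups, their scores, and the sample sizes are invented and do not come from any real dataset. It only shows how a sample that under-represents one group pulls an estimate away from the true value.

```python
from statistics import mean

# Hypothetical population: two equally sized groups with different scores.
# The group labels and numbers are invented for illustration only.
population = [("A", 70)] * 500 + [("B", 50)] * 500


def average_score(records):
    """Return the mean score over a list of (group, score) records."""
    return mean(score for _, score in records)


# Representative sample: both groups appear in their true 50/50 proportion.
representative = [("A", 70)] * 50 + [("B", 50)] * 50

# Skewed sample: group B is almost absent, as when data is collected
# mostly from one culture or community.
skewed = [("A", 70)] * 95 + [("B", 50)] * 5

true_mean = average_score(population)
fair_estimate = average_score(representative)
skewed_estimate = average_score(skewed)

# The gap does not average out with more samples drawn the same way;
# it is built into how the sample was chosen. That is the systematic error.
systematic_error = skewed_estimate - true_mean

print(f"true mean: {true_mean}")
print(f"representative sample: {fair_estimate}")
print(f"skewed sample: {skewed_estimate}")
print(f"systematic error: {systematic_error}")
```

An AI trained only on the skewed sample would treat group A's scores as "normal" for everyone, which is exactly the unintentional bias described above.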

So, is bias a bad thing by definition? When I watch a programme run, I can predict what it will do and tell whether its behaviour is typical of its regular process, because I “know” what is and isn’t typical. This type of bias is required. It is the result of experience and domain expertise.

When politics enters the picture, the situation deteriorates: the politics of race, culture, language, and so on. These are the things that will precipitate AI’s dark era. There will be no Terminators or Skynet or Ultron. All of that is generated to justify the development of one technology while opposing the development of another. We’ve already established boundaries around where we reside. Is it necessary to do the same with technology?

This conflict is as old as the human conflict between good and evil behaviour. This problem has no technological solution. Identifying and comprehending biases is difficult since we do not understand our own biases in the first place. Working with others, on the other hand, makes us conscious of our own biases as well as the biases in others.

We must treat AI with care. From a humanitarian perspective, we must ensure that AI’s prejudice does not damage or discriminate against individuals or groups of individuals.

That is the yin and yang of AI.
